
How accurate is NLP?

We put a few of the NLP engines and associated SaaS transcription services to a quick test to find out how good they are.

Update 03/05/2018 to include 2 additional companies

NLP, or Natural Language Processing, is not new, having started in the 1950s. NLP often finds itself in the orbit of other similar three-letter acronyms such as TTS (text-to-speech), STT (speech-to-text) and ASR (automatic speech recognition). For this article we’ll look at speech-to-text engines.

But how well does the machine understand you? Nearly a year ago, Google announced that it had achieved a 95% accuracy rating, effectively matching the recognition of another human. Google Home users can easily attest to how accurate the little device is at understanding the intent of a request.

Google Home deals in short utterances, such as “ok google what do you think the weather in san francisco will be today?”. To answer, it only needs to be smart enough to know I’m asking about the keywords “weather, san francisco, today”. But the question I’m asking is: how accurately did Google understand everything I was saying?

With all the talk about NLP, services have emerged which take audio and turn it into readable text. Transcription is not new; for years you could send an audio file in and have a human perform the work. This human work was expensive, with prices from $0.65 to $3.00 per minute of audio.

These new transcription services promise to be less expensive (< $0.10 per minute) and provide online tools to help you manually clean up the text. The transcription services are likely using engines from the Big 4 cloud providers (Google, Microsoft, IBM, AWS). So let’s put them to the test.

We extracted an .mp3 audio file from the first minute of the recent Kranky Geek event and pushed it through various services. The professionally recorded audio has a single speaker (Chad Hart). While this is a single sample for this blog article, we tried various other samples and got similar results. Then we experimented with an accented speaker and the near-impossible task of identifying multiple speakers in the audio.

No service delivered 95% accuracy without some manual clean-up (more on that later). So if you were looking for a miracle, you may have to wait a bit longer. Of the API services, Google clearly won with the highest level of accuracy and the fastest processing time.
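For anyone who wants to put a number on accuracy themselves, here is a minimal sketch of word error rate (WER), a common way to score a machine transcript against a human one. This is an assumption on our part about how you might measure it, not a description of how any vendor arrives at its figures. The function names and the sample sentences are made up for illustration.

```python
import re


def normalize(text):
    """Lowercase the text and keep only word tokens, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = normalize(reference), normalize(hypothesis)
    if not ref:
        raise ValueError("reference transcript is empty")
    # Dynamic-programming edit distance, one row at a time, over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,         # word missing from the hypothesis
                curr[j - 1] + 1,     # extra word in the hypothesis
                prev[j - 1] + cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example strings, not output from any of the tested services.
human = "What will the weather be in San Francisco today?"
machine = "what will the whether be in san francisco"
wer = word_error_rate(human, machine)
print(f"WER: {wer:.1%}, word accuracy: {1 - wer:.1%}")
```

One substituted word and one dropped word out of nine gives a WER of about 22%, which shows how quickly small slips add up on short clips.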

Let’s get to the meat: here is the YouTube video and the results!

Human Transcription

Google Speech API

Microsoft Speech API

IBM Watson

If you watch the video and then read my manual transcript, you suddenly realize most of us don’t speak in such a way that we can easily be read back. It quickly becomes evident that we humans fill in a lot of missing pieces when listening.

Google and Microsoft produced nearly the same results, but the Google API blazed through the 1-minute audio clip in 19 seconds! Microsoft took roughly twice as long, clocking in at a leisurely 40 seconds. IBM was in the middle of the pack.
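To compare speeds across clips of different lengths, it helps to divide processing time by audio duration, often called the real-time factor (lower is faster, and anything under 1.0 is faster than real time). The sketch below uses only the two timings reported above; the function name is our own.

```python
def real_time_factor(processing_seconds, audio_seconds):
    """Processing time divided by audio length; below 1.0 beats real time."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return processing_seconds / audio_seconds


AUDIO_SECONDS = 60  # the one-minute test clip
timings = {"Google": 19, "Microsoft": 40}  # seconds, as measured in this test

for service, seconds in timings.items():
    rtf = real_time_factor(seconds, AUDIO_SECONDS)
    print(f"{service}: {rtf:.2f}x real time")
```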

From a developer perspective (subjective), Google’s API was the easiest to work with for this simple test (though you have to wrap your head around how Google Cloud works). Microsoft had scant documentation, leaving us mostly to wing it. IBM was fairly straightforward. Microsoft caps your audio segments at 10 minutes per file (Google supports 180 minutes).
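If you have a long recording and a service with a per-file cap, you will need to split the audio before uploading. Here is a minimal sketch that only plans the cut points in seconds; the 600-second default mirrors the 10-minute cap mentioned above, the function name is hypothetical, and the actual cutting would be done with whatever audio tool you prefer.

```python
def plan_segments(total_seconds, max_segment_seconds=600):
    """Return (start, end) offsets in seconds, each no longer than the cap."""
    if total_seconds < 0 or max_segment_seconds <= 0:
        raise ValueError("durations must be non-negative and the cap positive")
    segments = []
    start = 0
    while start < total_seconds:
        end = min(start + max_segment_seconds, total_seconds)
        segments.append((start, end))
        start = end
    return segments


# A 25-minute recording split for a service with a 10-minute cap.
for start, end in plan_segments(25 * 60):
    print(f"{start // 60:02d}:{start % 60:02d} -> {end // 60:02d}:{end % 60:02d}")
```

Cutting on fixed boundaries can slice a word in half, so in practice you would probably want to nudge each cut to a nearby pause.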

We pulled the machine-generated closed captioning from YouTube, thinking it would be identical to the output of the Google Cloud API. While similar, the two weren’t identical; there were some very minor differences. This suggests Google may be using a slightly different version of its own Speech API internally.

While the first 15 seconds (samples above) were quite good, things fell apart after that, with the transcripts becoming increasingly difficult to read.

One of the better transcriptions came from Voicebase. Here is their full one-minute transcript:

Voicebase (complete transcript)

to kick things off let me just make a couple introductions My name is Chad Hart some of you many seen Webre see hacks blog help out with also like to introduce Chris Crocker otherwise known as cranky he’s a cranky and cranky left the back in the back Chris say hi I suspect your hair is hard to mess I’m sure you hear him all right

and my colleague over here so I who you hear of a lot more of throughout the day you’ve probably seen his blog blog me

if you’re on the Twitter web or see live as our hashtags.

We also experimented with the following SaaS services: Temi, Sonix, SimonSays, Remeeting and Voicebase.

These SaaS services allow a non-techie to upload a file, get a transcript, and then edit it manually to correct the computer-generated text. We saw some similar results, meaning they are most likely using the same NLP engine. All of these SaaS services (even if they’re using an API provider) are doing post-processing to clean up the transcript and make it more readable.
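We judged "similar results" by eye, but the comparison can be roughed out in code. The sketch below scores two transcripts word by word with Python's standard `difflib`; a score near 1.0 hints (and only hints) that two services share an engine. The function name and sample strings are made up for illustration and are not output from the services we tested.

```python
from difflib import SequenceMatcher


def transcript_similarity(first, second):
    """Similarity between 0.0 and 1.0, comparing whole words, ignoring case."""
    words_a = first.lower().split()
    words_b = second.lower().split()
    return SequenceMatcher(None, words_a, words_b).ratio()


# Hypothetical transcripts of the same sentence from two services.
service_a = "some of you may have seen the blog"
service_b = "some of you many seen the blog"

score = transcript_similarity(service_a, service_b)
print(f"similarity: {score:.2f}")
```

Because the SaaS services post-process their output, identical engines can still produce slightly different text, so treat the score as a clue rather than proof.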

It took about 1 minute of manual work to clean up the 1 minute of automatic transcription.

Summary

Voicebase – has been at this for a while and appears to be using its own NLP engine. They are focused on speech analytics and on finding (counting) keywords in transcripts. Voicebase also offers human transcription.

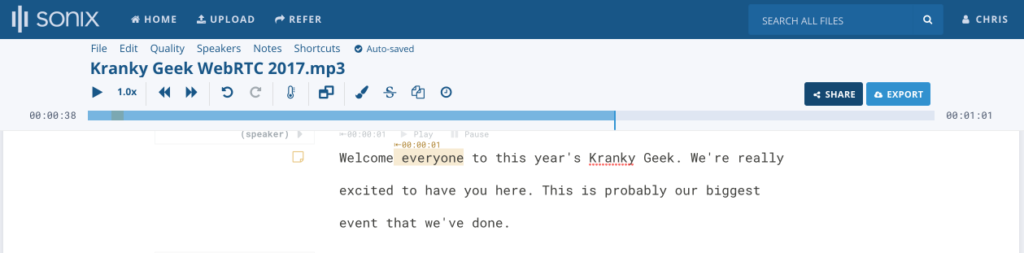
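Counting keywords in a transcript, the kind of analytics Voicebase focuses on, is easy to approximate yourself. This is a minimal sketch of the general idea and makes no claim about how Voicebase implements it; the function name is our own, and the sample text is a short excerpt from the Voicebase transcript above.

```python
import re
from collections import Counter


def count_keywords(transcript, keywords):
    """Count how often each keyword appears as a whole word, ignoring case."""
    word_counts = Counter(re.findall(r"[a-z0-9']+", transcript.lower()))
    return {keyword: word_counts[keyword.lower()] for keyword in keywords}


excerpt = (
    "otherwise known as cranky he's a cranky and cranky "
    "left the back in the back"
)
print(count_keywords(excerpt, ["cranky", "back", "webrtc"]))
```

Note that the counts are only as good as the transcript: a term the engine mangles (such as "WebRTC" here) simply never shows up.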

Sonix – provides automated transcriptions and had a “movie” script type editing window that I personally liked. It was clean and easy to edit.

Temi – is similar to Sonix. Though the interface looked cleaner, it wasn’t as easy to get used to as Sonix; however, this is a matter of personal preference. Temi attempts to detect multiple speakers (though we found all of the services lacking in their capabilities here).

SimonSays – had transcripts identical to Temi and Sonix; however, the user interface wasn’t as feature rich.

Remeeting – had the most unique transcript (not the same mistakes the others made), leading us to believe they were using their own engine or heavily tweaking someone else’s. It’s just starting out; the editing window was promising, and they capture analytics as well. They claim the ability to do speaker recognition and create associated transcripts. However, in our testing (with a multi-party speaking sample), it got thoroughly confused.

Speechmatics – this UK-based company uniquely supports a variety of languages (beyond English), and the transcript errors it made were fairly unique (leading me to think they had their own ASR engine). There are no online editing capabilities, but you can download the transcript as pretty text, plain text or JSON.

Otter.ai – this is in beta and currently free to use. Its transcripts were reasonable, though the editing tool was a little clunky.

Summary

There is promise here but perhaps we’re still quite early in the game. Here were my key takeaways:

- For the moment you can expect to do a fair bit of manual clean-up to get something that is readable from a machine generated transcript.

- How to contextually search these transcripts remains elusive. We’re working on a sentiment analysis posting that will carry this further. Searching and finding key topics is not super easy.

- Accuracy remains problematic for machine transcriptions of anything that might be mission critical, such as in healthcare or legal matters.

- If you simply need to get a head start on transcribing an audio segment, these inexpensive SaaS services are a great start. However, you’ll still need to invest some manual time in clean-up.

Note: AWS announced their new Transcribe service; however, it remains in private beta and we were unable to test it. Twilio also has a transcription service, but this is more for short utterances than for long dialogs.